AGENTIC AI

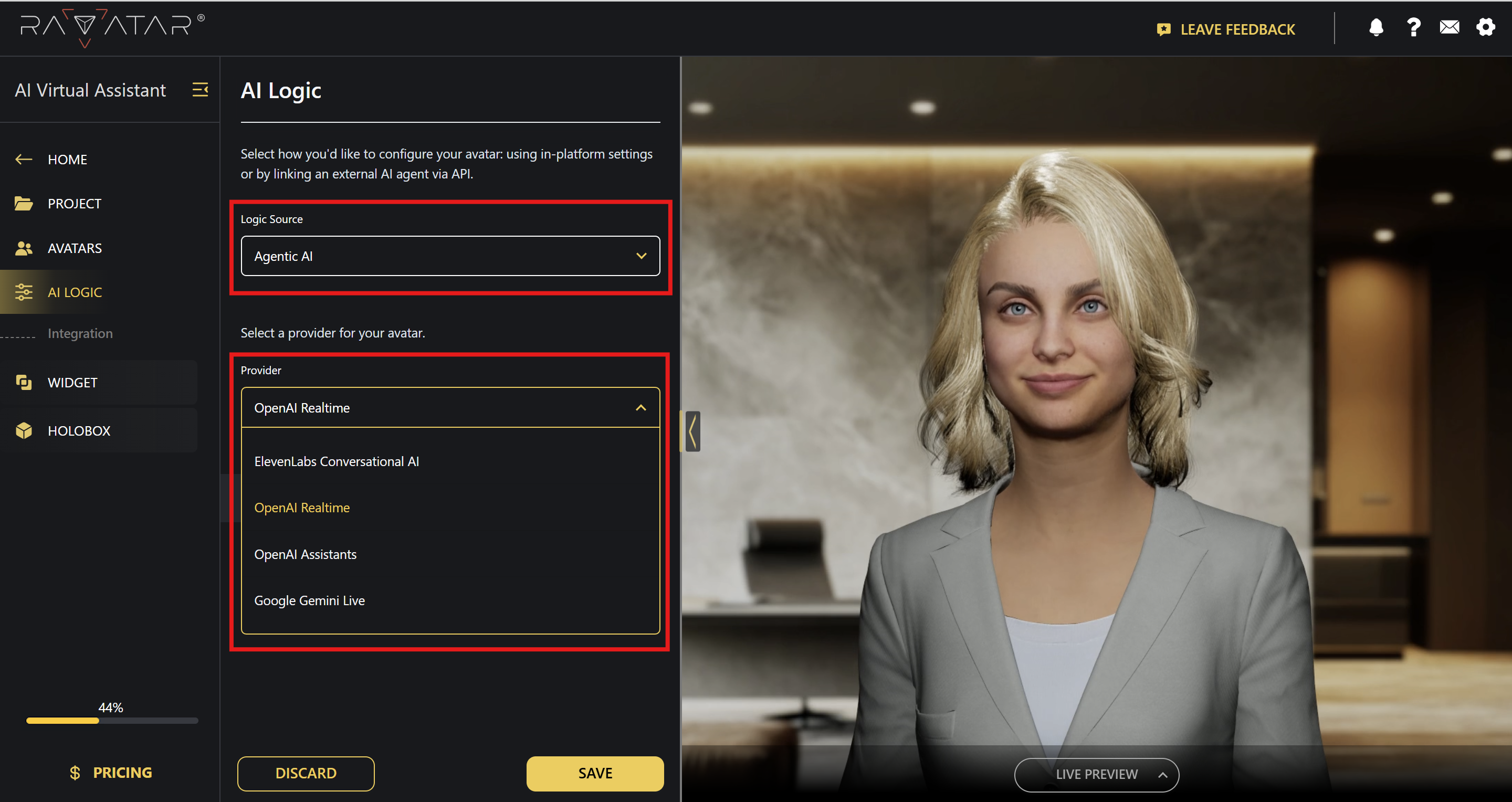

Choosing Agentic AI as the avatar’s logic source lets you connect a third-party AI agent that defines your avatar’s functions and behavior, instead of configuring them manually with the built-in Studio utilities (such as Role, LLM, and TTS).

The agent’s logic and parameters should be managed solely on the provider’s side. In the Studio interface, you only link the ready-made agent through an API key and choose from the available configurations it provides (such as model, voice, or other options).

Once Agentic AI is selected, a new Provider field appears, where you can choose from the currently supported AI services:

- OpenAI Assistants

- OpenAI Realtime

- ElevenLabs Conversational AI

- Google Gemini Live

This list may be extended in the future. If the Agentic AI service you’re looking for isn’t listed, contact us at support@ravatar.com. We’ll review the request and consider integrating the service based on relevance and technical feasibility.

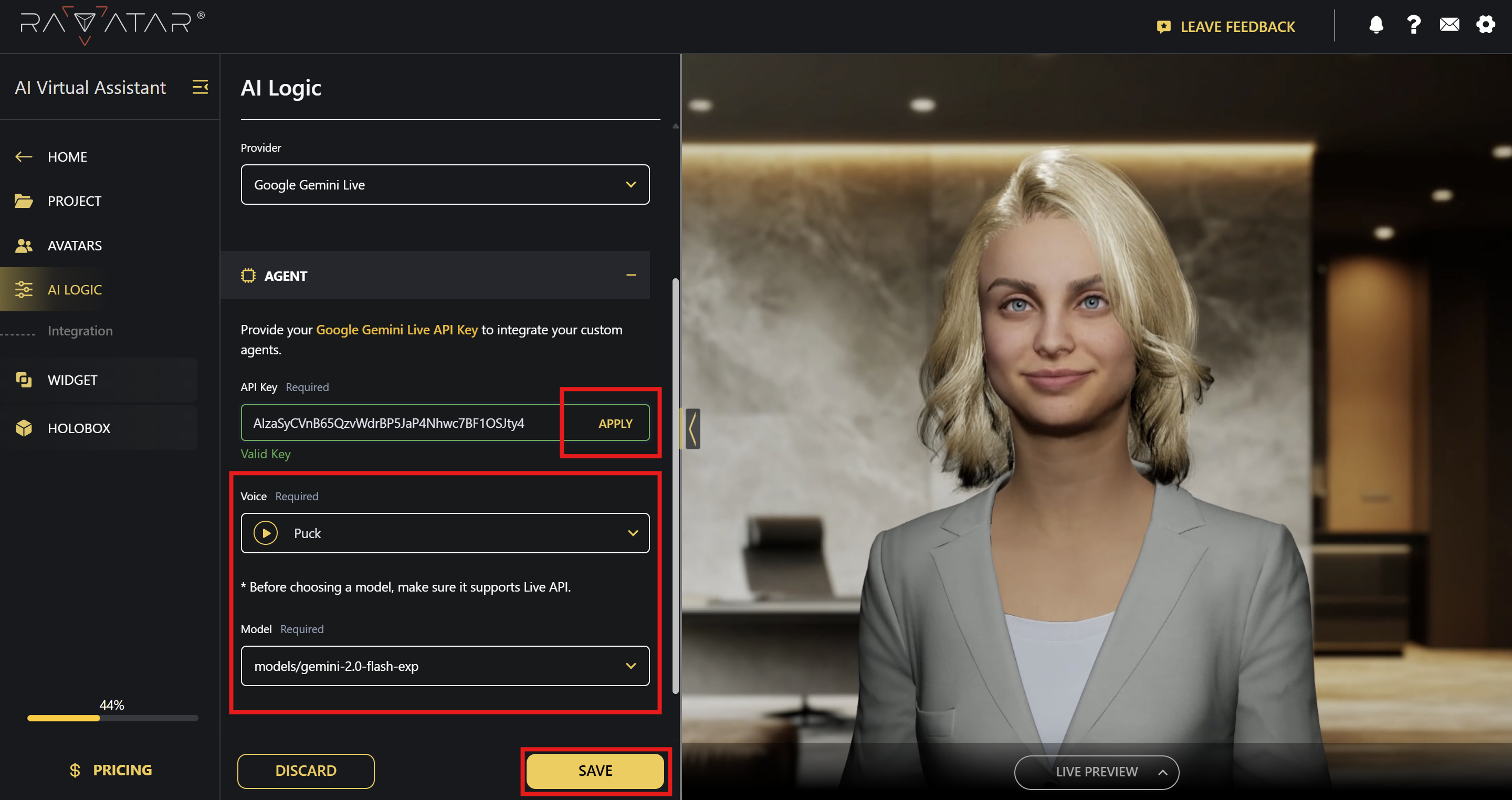

After you’ve chosen a provider from the list, go to the AGENT panel below and enter the API key from that service’s dashboard (the description text above the field includes a direct link to the relevant account settings). Then click Apply.

Once your key is successfully validated, additional configuration drop-downs appear. They vary depending on the selected AI service: for example, Voice and Model for OpenAI Realtime and Google Gemini Live, or Assistant for OpenAI Assistants.
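The provider-specific settings can be pictured as a small lookup table. The Python sketch below is purely illustrative and is not part of Studio or of any provider’s API: the names `ProviderProfile`, `PROVIDERS`, and `missing_settings` are hypothetical. It encodes only what this guide states, including the Text to Speech panel that OpenAI Assistants requires (covered later in this guide). The drop-downs for ElevenLabs Conversational AI are not listed here, so they are left unspecified.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class ProviderProfile:
    # Drop-downs shown after the API key is validated.
    # None means this guide does not list them for the provider.
    dropdowns: Optional[Tuple[str, ...]]
    # True when a separate Text to Speech panel must also be configured.
    needs_tts: bool = False


# Hypothetical table built only from the examples given in this guide;
# the real interface may expose further options.
PROVIDERS = {
    "OpenAI Assistants": ProviderProfile(("Assistant",), needs_tts=True),
    "OpenAI Realtime": ProviderProfile(("Voice", "Model")),
    "Google Gemini Live": ProviderProfile(("Voice", "Model")),
    "ElevenLabs Conversational AI": ProviderProfile(None),  # not specified here
}


def missing_settings(provider: str, chosen: dict) -> list:
    """Return the names of settings that are still unset for a provider."""
    profile = PROVIDERS[provider]
    required = list(profile.dropdowns or ())
    if profile.needs_tts:
        required.append("Text to Speech")
    # A setting counts as missing when it is absent or empty.
    return [name for name in required if not chosen.get(name)]
```

For instance, `missing_settings("OpenAI Assistants", {"Assistant": "my-assistant"})` returns `["Text to Speech"]`, a reminder that a text-based agent still needs a voice configured before the avatar can speak.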

After you specify all the required parameters, click Save to store the changes in your project.

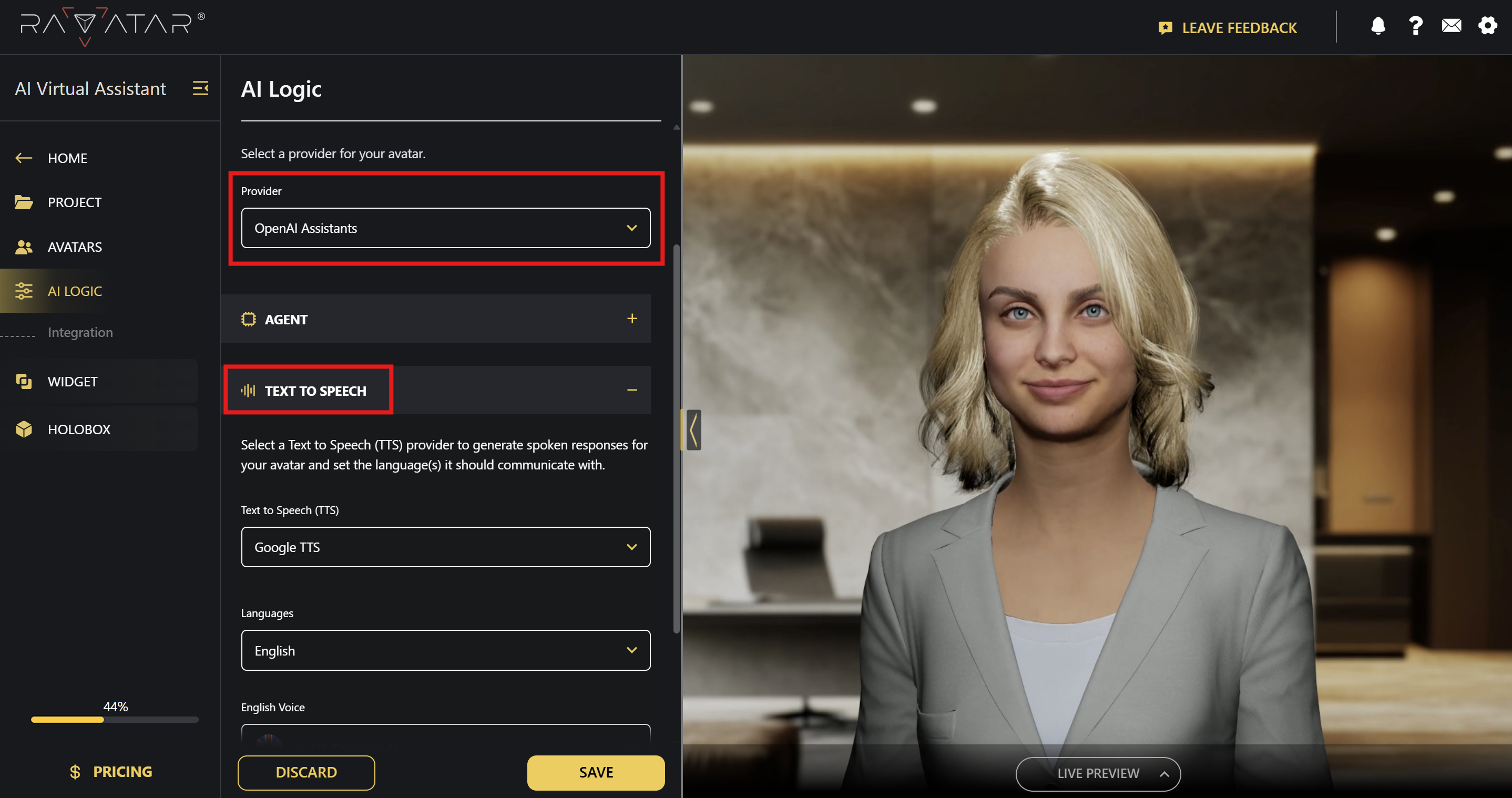

For OpenAI Assistants, an additional Text to Speech settings panel appears below. It is required for your AI avatar to communicate by voice, since this AI service is entirely text-based.

See the TTS guide for details on this configuration, and click Save after you finish.

From this point on, your AI avatar will operate according to the predefined logic of the connected AI agent. You can test it in a live conversation using the Preview Display panel.